2 Training (10 minutes)

This guide covers local model training for CARLA Leaderboard.

Note

This tutorial is specific to training policies for CARLA Leaderboard. For cross-dataset training (NAVSIM, Waymo), see Cross-dataset training. For large-scale training on HPC clusters, refer to the SLURM Training Guide.

2.1 Prerequisites

The following steps need to be performed once per dataset.

2.1.1 Prepare Data

This step assumes you have either:

Followed the data collection tutorial to collect data locally, or

Downloaded the data from Hugging Face.

Either way, your data structure should match:

data/carla_leaderboard2
├── data
│   └── BlockedIntersection
│       └── 999_Rep-1_Town06_13_route0_12_22_22_34_45
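As a quick sanity check before building buckets, the layout above can be listed programmatically. This is a minimal sketch, assuming a two-level scenario/route nesting under the data directory; the helper name list_routes is hypothetical and not part of the repository.

```python
from pathlib import Path


def list_routes(dataset_root):
    """Return (scenario, route) name pairs found under <dataset_root>/data.

    Assumes the layout <dataset_root>/data/<scenario>/<route>, as in the
    tree shown in this guide. Hypothetical helper, not part of the repo.
    """
    data_dir = Path(dataset_root) / "data"
    if not data_dir.is_dir():
        raise FileNotFoundError(f"Missing data directory: {data_dir}")
    routes = []
    # Sort for a stable, reproducible listing.
    for scenario_dir in sorted(p for p in data_dir.iterdir() if p.is_dir()):
        for route_dir in sorted(p for p in scenario_dir.iterdir() if p.is_dir()):
            routes.append((scenario_dir.name, route_dir.name))
    return routes
```

Calling list_routes("data/carla_leaderboard2") from the repository root should return one pair per collected route. An empty list suggests the data was placed one directory level too high or too low.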

2.1.2 Build Data Buckets

Buckets group training samples by characteristics (scenarios, towns, weather, road curvature, etc.) to enable curriculum learning and balanced batch sampling.

Default bucket collections:

Pre-training: lead/data_buckets/full_pretrain_bucket_collection.py samples uniformly across all available data

Post-training: lead/data_buckets/full_posttrain_bucket_collection.py filters out the initial and final samples of each sequence (which may contain initialization artifacts) and samples uniformly from the remaining data

Buckets are built once, stored on disk, and automatically reused in subsequent runs.
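To make the bucket idea concrete, the sketch below groups samples by one characteristic, trims the start and end of a sequence as the post-training collection is described to do, and draws a balanced batch. It is an illustration only: trim_sequence, build_buckets, and sample_balanced are hypothetical names, and the real bucket collections in lead/data_buckets may work differently.

```python
import random
from collections import defaultdict


def trim_sequence(samples, skip_start, skip_end):
    """Drop the first `skip_start` and last `skip_end` samples of one sequence."""
    end = len(samples) - skip_end
    # Guard against sequences shorter than the trimmed margins.
    return samples[skip_start:end] if end > skip_start else []


def build_buckets(samples, key):
    """Group samples into buckets by the value of key(sample)."""
    buckets = defaultdict(list)
    for sample in samples:
        buckets[key(sample)].append(sample)
    return dict(buckets)


def sample_balanced(buckets, batch_size, rng):
    """Draw a batch by picking a bucket uniformly, then a sample inside it."""
    names = sorted(buckets)
    return [rng.choice(buckets[rng.choice(names)]) for _ in range(batch_size)]


if __name__ == "__main__":
    # Toy samples; the "town" field is an assumed example characteristic.
    samples = [{"town": "Town06", "frame": i} for i in range(6)]
    samples += [{"town": "Town12", "frame": i} for i in range(2)]
    buckets = build_buckets(samples, key=lambda s: s["town"])
    print({name: len(items) for name, items in buckets.items()})
    print(sample_balanced(buckets, 4, random.Random(0)))
```

Picking the bucket first means a rare town is drawn as often as a common one, which is the balancing effect. Sampling uniformly over all samples instead would reproduce the raw data distribution.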

Build pre-training buckets:

python3 scripts/build_buckets_pretrain.py

Build post-training buckets:

python3 scripts/build_buckets_posttrain.py

Expected output structure:

data/carla_leaderboard2
├── buckets
│   ├── full_posttrain_buckets_8_8_8_5.gz
│   └── full_pretrain_buckets.gz
├── data
│   └── BlockedIntersection
│       └── 999_Rep-1_Town06_13_route0_12_22_22_34_45

Bucket files use relative paths and are portable across machines.

Note

Buckets contain only the data samples available at build time. Rebuild buckets after adding or removing routes.

Note

If you encounter hard-to-debug errors after major code changes, try deleting and rebuilding the buckets.

2.1.3 Build Persistent Data Cache

Raw sensor data (images, LiDAR, RADAR) requires preprocessing—decompression, format conversion, and temporal alignment. The training cache stores preprocessed, compressed data on disk, eliminating redundant computation and accelerating data loading. Once built, the cache is reused across all training runs.

Two cache types:

persistent_cache: Stored alongside the dataset, reused across all training sessions. See PersistentCache.

training_session_cache: Temporary cache on local SSD during cluster jobs, implemented with diskcache. During the first few epochs, data is loaded from shared storage and cached on the job’s local SSD for faster subsequent access. This implementation is specific to our organization’s SLURM cluster setup.
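The caching pattern behind both types can be sketched as "preprocess once, then read the compressed result from disk". The example below is a minimal illustration using only the standard library; cached_load and its file naming are assumptions and do not reflect the actual PersistentCache implementation.

```python
import gzip
import pickle
from pathlib import Path


def cached_load(sample_path, cache_dir, preprocess):
    """Return preprocess(sample_path), reusing a compressed on-disk copy if present.

    Hypothetical helper. The cache key is just the file name, which is enough
    for a sketch; a real cache would key on the full relative path so that
    frames from different routes cannot collide.
    """
    cache_file = Path(cache_dir) / (Path(sample_path).name + ".pkl.gz")
    if cache_file.exists():
        # Cache hit: skip preprocessing entirely.
        with gzip.open(cache_file, "rb") as f:
            return pickle.load(f)
    # Cache miss: run the expensive step once and store the result.
    result = preprocess(sample_path)
    cache_file.parent.mkdir(parents=True, exist_ok=True)
    with gzip.open(cache_file, "wb") as f:
        pickle.dump(result, f)
    return result
```

Because the stored result depends on the preprocess function, any change to preprocessing makes existing cache entries stale, so the cache needs to be rebuilt after such changes.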

Build the persistent cache:

python3 scripts/build_cache.py

Expected output structure:

data/carla_leaderboard2
├── buckets
│   ├── full_posttrain_buckets_8_8_8_5.gz
│   └── full_pretrain_buckets.gz
├── cache
│   └── BlockedIntersection
│       └── 999_Rep-1_Town06_13_route0_12_22_22_34_45
├── data
│   └── BlockedIntersection
│       └── 999_Rep-1_Town06_13_route0_12_22_22_34_45

The training session cache is not built by this script. It is filled from shared storage during the first few epochs of a run and stored on the training job’s local disk. This implementation is specific to our organization’s SLURM clusters.

Note

Rebuild the cache after modifying the data pipeline (e.g., adding new semantic classes or changing preprocessing steps).

2.2 Perception Pre-training

Following standard TransFuser-like training procedures, training occurs in two phases: first, train only the perception backbone, then train the complete model end-to-end.

python3 lead/training/train.py logdir=outputs/local_training/pretrain

With a single route, training takes approximately 1-2 minutes and produces:

outputs/local_training/pretrain
├── config.json
├── events.out.tfevents.1764250874.local.105366.0
├── gradient_steps_skipped_0030.txt
├── model_0030.pth
├── optimizer_0030.pth
├── scaler_0030.pth
└── scheduler_0030.pth

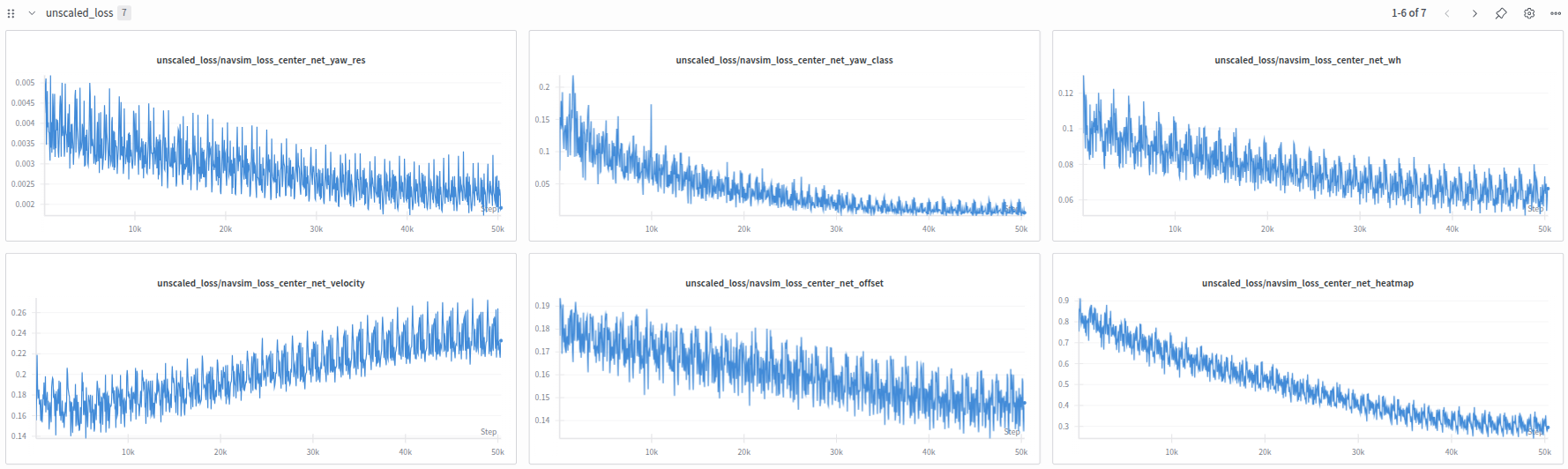

The training script generates WandB/TensorBoard logs and visualization images at outputs/training_viz. Control logging frequency with log_scalars_frequency and log_images_frequency in lead/training/config_training.py.

Image logging runs at least once per epoch and can be expensive. Disable it by setting visualize_training=false in the config.

View training logs with TensorBoard:

tensorboard --logdir outputs/local_training/pretrain

WandB logging is also supported. Enable it by setting log_wandb=true in the training config.

2.3 Planning Post-training

Note

During post-training, the epoch count resets to 0. The optimizer state is reinitialized because the planner was not included in the pre-training checkpoint.

After pre-training completes, continue with post-training to add the planner and train the complete model end-to-end:

python3 lead/training/train.py logdir=outputs/local_training/posttrain load_file=outputs/local_training/pretrain/model_0030.pth use_planning_decoder=true
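The checkpoint number in load_file depends on how long pre-training ran, so it can help to look up the newest checkpoint instead of hard-coding model_0030.pth. The following is a small sketch, assuming checkpoints follow the model_XXXX.pth naming shown in the pre-training output; latest_checkpoint is a hypothetical helper, not a script shipped with the repository.

```python
import re
from pathlib import Path

# Matches names such as model_0030.pth and captures the number.
_CHECKPOINT_RE = re.compile(r"^model_(\d+)\.pth$")


def latest_checkpoint(logdir):
    """Return the model_XXXX.pth path with the highest number, or None."""
    best = None
    for path in Path(logdir).glob("model_*.pth"):
        match = _CHECKPOINT_RE.match(path.name)
        if match is None:
            continue  # Ignore files that only look similar, e.g. model_best.pth.
        number = int(match.group(1))
        if best is None or number > best[0]:
            best = (number, path)
    return None if best is None else best[1]
```

For example, latest_checkpoint("outputs/local_training/pretrain") returns the path to pass as load_file, or None if pre-training has not written a checkpoint yet.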

2.4 Resume Failed Training

To continue from a failed training run, set continue_failed_training=true in the training config.

2.5 Distributed Training

The pipeline supports Torch DDP. See example scripts:

2.6 Common Issues

2.6.1 CARLA Server Running in Background

This error may occur:

RuntimeError: cuDNN error: CUDNN_STATUS_INTERNAL_ERROR

This typically indicates a CARLA server is consuming VRAM in the background. Kill all CARLA processes:

bash scripts/clean_carla.sh

2.6.2 Unknown GPU Name: <gpu_name>

Register your GPU in the training configuration:

Step 1: Add your GPU name to the gpu_name function in lead/training/config_training.py.

Step 2: If your GPU supports bf16 (bfloat16), add it to both use_mixed_precision_training and use_gradient_scaler functions in the same file.

Why explicit registration? Mixed precision training (BF16) is opt-in rather than automatic. On some older GPUs like RTX 2080 Ti, BF16 can degrade training performance, so we require explicit configuration.
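The opt-in design can be pictured as a lookup table that fails loudly for GPUs nobody has registered. The sketch below only illustrates that idea: BF16_OPT_IN and use_bf16 are hypothetical names, the device strings are example entries, and the real gpu_name, use_mixed_precision_training, and use_gradient_scaler functions in lead/training/config_training.py may be structured differently.

```python
# Hypothetical registry mapping a GPU name to whether BF16 should be used.
BF16_OPT_IN = {
    # Named in this guide as a GPU where BF16 can degrade training.
    "NVIDIA GeForce RTX 2080 Ti": False,
    # Illustrative entry for a GPU generation with BF16 support.
    "NVIDIA A100-SXM4-80GB": True,
}


def use_bf16(device_name, registry=BF16_OPT_IN):
    """Return the registered BF16 choice, or fail for unregistered GPUs."""
    if device_name not in registry:
        # Refusing to guess forces an explicit decision for every new GPU.
        raise ValueError(f"Unknown GPU name: {device_name}")
    return registry[device_name]
```

Raising an error instead of defaulting to either setting means a new GPU never silently trains in a precision mode that was not chosen for it.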

2.6.3 Unknown CARLA Root Path: <carla_root>

Register your CARLA dataset configuration in the target_dataset function in lead/training/config_training.py. Map your CARLA root path to the appropriate dataset configuration, which specifies the expected sensor setup and data format.

Why this design? This allows running training experiments while simultaneously collecting data with different sensor configurations. The target_dataset in lead/expert/config_expert.py controls which sensors are mounted on the expert vehicle during data collection.